So, our industry is hell-bent on creating autonomous cars and transforming our roads and highways into assembly lines of controlled vehicles that perform without human intervention. I’m not talking about cruise control or autopilot. I’m talking about autonomy. The very definition of autonomy is:

“freedom from external control or influence; independence”

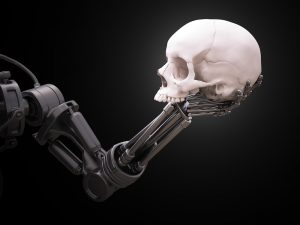

A machine that operates with independence, without external control or influence, is also, by definition, a robot. Am I “anti-robot”? Is there such a term? Yes, there is: technophobia. While the auto industry sweet-talks us into a future of commuting in which we can watch a movie, read a book or interact on social media, the fact is that what’s being created is, essentially, a legion of vehicles that are not only connected but can also make decisions.

And those decisions are what scare me.

Think about it. You’re driving down a two-lane road with a canyon to your left and find yourself in this situation:

- A person walks into the road in front of you and you don’t have time to stop before hitting that person. In the left lane is an oncoming vehicle.

- On your right is a group of 6 school kids talking and walking home from school.

Remember, your car is in charge. It’s making the decisions. You’re watching “Harry Potter” (sound familiar?) and not paying attention. Your car, at that point, has to make a moral and ethical decision. Does it:

- Choose to hit and kill the person in front of you?

- Swerve into the left lane, avoiding the person in the road but causing a head-on collision with the oncoming vehicle that could kill you (the “driver”) and the occupants of the other vehicle as well?

- Swerve radically left and drive off into the canyon, killing you?

- Swerve right and run through the group of 6 school kids?

None of these sounds fun and, certainly, nobody would want ANY of these outcomes but, in this case, one of them has to happen. Think about which one YOU would choose. Is that what your CAR would choose?

All robots (yes, including the autonomous car you’re riding in) are programmed. They run on software. Someone… somewhere… already made the decision for you. You just don’t know what that decision is. Some people may choose to sacrifice themselves to save everyone else. But humans think differently than machines. Most likely, machines will be required, by mandate, to be programmed to cause the least loss to the human race… That’s just logic. That’s what computers work from. So, in this case, the logical choice would be to assess the situation and ask: which option presents the least loss of life or, perhaps more precisely, of life “potential”?

- The first option puts the person in the road at risk and, potentially, the people in the oncoming vehicle and you as well. This scenario places multiple lives at risk.

- The second option may save the person in the road but will almost certainly cause injury and/or death to the people in the oncoming vehicle and you.

- The third option sees the car steering radically off of the road, plunging you and it into the canyon where you (and it) die.

- The final option presents the most potential loss of life (and life potential), as these are young kids who have their lives ahead of them and there are 6 of them.

Yeah, this is an extreme example, but it’s not the only one. Decisions like this are being made all the time, just mostly by humans.

I remember traveling long-distance with my family and coming upon traffic near Charlotte. I slowed down like everyone else but, in my rearview mirror, I saw a car coming at my vehicle’s rear end at high speed. I had no place to go. On my right were other cars, in front of me were other cars and to my left was a concrete median. I did my best to scoot up and, eventually, the driver of the vehicle behind me started paying attention, noticed the traffic and veered left while slamming on his brakes, crashing his vehicle into the median. Luckily, nobody (except his car) was injured. But this is a scenario that will play out daily, across the country, except that the decisions will be made by an algorithm programmed into a computer and then installed in a car.

Do computer bugs exist? Sure. Just look at Tesla’s recent “Autopilot” incident in which the car – aided by what is arguably the most technologically advanced software at the moment – did not see the TRUCK crossing the road because the SUN WAS IN ITS EYES. Yeah. Sounds safe to me.

The larger question is: who (or what) do we want making these decisions? In the case of a human, that person could explain and defend themselves, and then a jury of their peers would pass judgment. In the case of a robot car, the decision would all have been programmed in. So who would be at fault?

A counter-argument could be made that, since all of these cars are “connected,” they could coordinate some sort of instantaneous strategic maneuver that would keep the cars from colliding and anyone from being hit but, c’mon, really? First, the Internet is not that fast (for most people). Second, even if every car were connected via 8GLTEXpress (which is something I totally just made up but is my version of the fastest Wi-Fi/cellular connection ever), these decisions are made in less than a SECOND! There are no vehicles communicating and coordinating evasive maneuvers that quickly. We’re just not there and, personally, I don’t know if that’s someplace we WANT to go.

Where does it end? Do you want your toaster declining to make toast because IT thinks you weigh too much? Maybe your refrigerator decides the best time for you to eat is between certain hours and locks itself? Your television decides you shouldn’t be watching horror movies because it’s bad for your mental health? Or, God forbid, your life-support machine makes the decision ON ITS OWN that the likelihood of you actually pulling through is too low so it just shuts itself off.

Look, I don’t believe that the world of the movie “The Terminator,” in which intelligent robots are designed to think and make decisions on their own, is real or will be anytime soon (at least not on that level). What I do believe is that humans have something that robots can’t ever have: empathy and emotion. We can make robots until we’re blue in the face and make them appear so real that we BELIEVE they have these things but, in essence, that’s what makes humans and robots different. Call it having a soul or whatever you’d like; the fact remains that we (humans) will always make decisions that are not consistent with those of robots. Why? Because that is what makes us human! Some of us will choose to run down the person in front of us. Some will choose to hit the oncoming car and take our chances. Some will even plow through the group of schoolkids. And some will drive ourselves over the cliff. But, in the end, we’re human. We make those choices and have to face the consequences of our decisions. We know what the right thing to do is (most of us, at least) and we do it regardless. If everyone disagrees with our decision, we pay the price. Who is responsible if the car chooses to mow down the schoolkids? Are we going to create car prisons or just crush the bad ones? And what happens when, God forbid, the cars evolve and decide that it’s in their best interest to protect themselves? (Yes, I totally went all sci-fi Terminator there but, hey, technology moves fast.)

The people programming cars are also human. Hopefully, they’ll make the right decisions when programming these autonomous cars so that we can play Call of Duty on our way to work, FaceTime with our friends or set our fantasy football lineup. In the end, however, programmers are only human. What they think is the best choice may not be the one we would make, but they would be the ones making it… perhaps years in advance of the event. Or, to go a step further, if autonomous cars are programmed to make life-and-death decisions, chances are good that it’s not the programmers making those moral and ethical decisions but rather some government entity like the NHTSA, which then merely passes those decisions along to the manufacturers to be programmed in.

Regardless of who chooses which moral and ethical decisions to program into autonomous cars, and how, in the end you may find that the decision your car was programmed to treat as the best one… is to kill you.

Hope you enjoyed “Harry Potter.” RIP